What Is Ferret AI

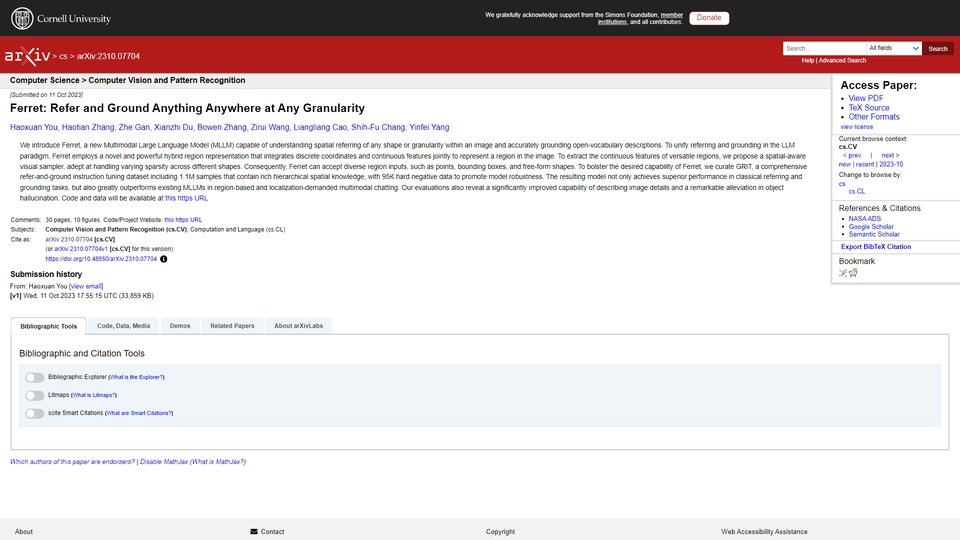

Ferret is a multimodal large language model (MLLM) developed by Apple that combines image understanding with language processing. Its focus is spatial references: working out which part of an image a phrase refers to, and reasoning about a specific region that a user points to. That focus makes it well suited to applications that need a detailed understanding of a visual scene and of the language used to describe it.

Ferret processes complex visual scenes and links what it sees to the corresponding language. This supports more accurate, context-aware interactions, with potential applications in augmented reality, autonomous navigation, and intelligent assistants. By handling visual data and language together, Ferret is better placed to support real-world tasks that require spatial awareness and careful interpretation of context.

Industries like robotics, virtual reality, and smart devices will likely find Ferret particularly beneficial. Its potential to improve user interaction experiences and enhance system autonomy makes it a significant advancement for developers and businesses looking to leverage AI's capabilities in applications where understanding the physical environment is crucial. Through Ferret, Apple continues to push the boundaries of what's possible with AI, offering a powerful tool for those striving to create more intuitive and intelligent systems.

Ferret AI Features

Ferret is Apple's multimodal large language model (MLLM), distinguished by its combined capabilities in image understanding and language processing. Its key features are outlined below.

Core Functionalities

Ferret's core functionality is its ability to process and understand visual and textual inputs together. This multimodal approach covers two areas:

- Image understanding: Ferret excels in interpreting and analyzing various types of images, extracting meaningful insights and identifying key elements.

- Language processing: The model is equipped to handle natural language with high proficiency, making sense of complex linguistic inputs and generating coherent responses.

Unique Selling Points

One of the most notable features of Ferret is its exceptional performance in understanding spatial references. Its advanced algorithms enable it to:

- Comprehend spatial arrangements within images.

- Bridge the gap between visual content and textual descriptions, making it particularly useful for applications requiring cross-modal understanding.
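To make the idea of a spatial reference concrete, the sketch below shows one way a region of an image could be written into a text prompt. It is a minimal illustration, not Ferret's actual interface: the `<region>` placeholder, the `[x1, y1, x2, y2]` text format, the 1000-bin coordinate grid, and the names `Box`, `to_grid`, and `refer_prompt` are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned region in pixel coordinates (left, top, right, bottom)."""
    x1: float
    y1: float
    x2: float
    y2: float


def to_grid(box: Box, width: int, height: int, bins: int = 1000) -> tuple[int, int, int, int]:
    """Scale pixel coordinates to integers in [0, bins - 1], independent of image size."""
    def scale(value: float, extent: int) -> int:
        # Clamp to the image first, then map onto the grid.
        clamped = min(max(value, 0.0), float(extent))
        return min(int(clamped * bins / extent), bins - 1)

    return (scale(box.x1, width), scale(box.y1, height),
            scale(box.x2, width), scale(box.y2, height))


def refer_prompt(question: str, box: Box, width: int, height: int) -> str:
    """Replace the hypothetical <region> placeholder with the box coordinates."""
    x1, y1, x2, y2 = to_grid(box, width, height)
    return question.replace("<region>", f"[{x1}, {y1}, {x2}, {y2}]")


if __name__ == "__main__":
    # A 640x480 image with a region of interest in the upper-left area.
    print(refer_prompt("What is the object <region>?", Box(64, 48, 320, 240), 640, 480))
```

Normalizing to a fixed grid keeps the text form of a region the same regardless of image resolution, which is why the example scales coordinates before writing them into the prompt.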

Key Advantages Over Competitors

Ferret's integration of advanced image and language processing capabilities sets it apart from other MLLMs. Some advantages include:

- Enhanced performance in tasks requiring the integration of visual and textual data.

- Superior ability to handle and interpret spatial references, making it a standout choice for industries that heavily rely on accurate spatial understanding.

Target Audience and Use Cases

Ferret is designed to cater to a broad range of users, from tech developers to businesses needing advanced AI solutions. It is particularly beneficial in:

- Industries like autonomous vehicles, where image and spatial data processing is critical.

- Content creation and analysis where multimodal content needs to be processed and understood seamlessly.

By uniquely combining image and language capabilities, Ferret offers users a robust tool for a multitude of AI-related applications.

Ferret AI FAQs

What is a multimodal large language model (MLLM)?

A multimodal large language model (MLLM) is a large language model that accepts input in more than one modality, most commonly text and images. It can interpret and generate human language while also analyzing visual data, so a single model can answer questions about what an image shows.

How does Apple's MLLM improve image understanding?

Apple's MLLM improves image understanding by recognizing visual content and placing it in context. This includes identifying objects, scenes, and spatial references, which makes it useful for applications that require visual comprehension.
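As an illustration of what identifying spatial references can look like in practice, the sketch below reads bounding boxes out of a model's text answer and converts them back to pixel coordinates. The response format (four integers in square brackets on a 1000-bin grid), the sample sentence, and the function name `extract_boxes` are assumptions for this example, not a documented Ferret output format.

```python
import re

# Assumed pattern: four comma-separated integers in square brackets.
BOX_PATTERN = re.compile(r"\[(\d+),\s*(\d+),\s*(\d+),\s*(\d+)\]")


def extract_boxes(response: str, width: int, height: int, bins: int = 1000):
    """Return pixel-space (x1, y1, x2, y2) tuples for every box found in the text."""
    boxes = []
    for match in BOX_PATTERN.finditer(response):
        gx1, gy1, gx2, gy2 = (int(group) for group in match.groups())
        # Map grid coordinates back onto the actual image size.
        boxes.append((gx1 * width / bins, gy1 * height / bins,
                      gx2 * width / bins, gy2 * height / bins))
    return boxes


if __name__ == "__main__":
    # Hypothetical answer text for a 640x480 image.
    answer = "A dog [100, 100, 500, 500] beside a ball [600, 700, 650, 750]."
    for box in extract_boxes(answer, 640, 480):
        print(box)
```

An application could then draw these boxes over the image or pass them to a downstream component such as a tracker.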

What are the benefits of using a multimodal language model?

The primary benefits of using a multimodal language model are the ability to handle complex tasks that involve both text and images, more efficient analysis of image-heavy content, better user interaction through improved understanding of context, and smoother communication in applications such as virtual assistants and content creation tools.